Sample Chapter of My Ruby Book

(This is a sample chapter I put together to see how I felt about writing a programming book.)

The Simple Script

Before starting to build a more complicated program, let’s start with something simple. We’re going to hack together a short script that works. It will upload a single cat picture to an S3 bucket. We’ll learn the basics of both a ruby script and how cloud storage works.

Lesson setup

In your working directory create a new branch for this chapter. The -b switch tells git to create a new branch and switch to it.

$ git checkout -b chapter-01

Create a new file with the ruby header and set the permissions so that we can run it directly.

$ echo '#!/usr/bin/env ruby' > uploader.rb

$ chmod +x uploader.rb

This is the file that we’ll be using for this chapter. Go ahead and make a commit. This way you can start over at any time.

$ git add uploader.rb

$ git commit -a -m "Starting ch. 1"

The -a switch tells git to include every tracked file that has changed, and the -m switch supplies the commit message. It’s a shorthand you’ll see often.

Your working directory should look like this.

$ ls -l

total 16

-rw-r--r-- 1 jenn staff 360B Feb 14 11:46 README.md

-rwxr-xr-x 1 jenn staff 20B Feb 14 13:10 uploader.rb*

You can see our new file has the right permissions (the x in the permission listing) and is ready for editing.

Any time we add code and the script runs with no errors, feel free to make a commit. I won’t bug you about it. But it’s good to save a version when the code works. Your workflow would look something like this:

- Edit & save file

- Run script

- Woohoo!

- git commit -a

If the next edit breaks something and you need to go back to a working version, just check out that file again.

$ git checkout -- <broken_file>

You’ll lose your current changes, but you don’t care. It didn’t work anyway.

Chapter files

The finished files for this chapter are in the chapter-01 branch. To see them, change to your cloned directory and do a checkout.

$ git checkout chapter-01

The complete script is also at the end of this chapter.

S3 key safety

The Amazon keys are literally the keys to your jungle kingdom. They’re the same as a user name and password on any other site. It’s very important to keep them safe. Anyone who finds your keys can log onto your account.

If you followed the instructions in Getting Started, you’ll have a key that only works with S3. If you were to lose that key, whoever found it would have upload and download access to your buckets. The best outcome would be the deletion of all your files. The worst outcome would be to have your account filled with multiple gigabytes of illegal content. You would be on the hook for both financial and legal consequences.

Now, if you didn’t follow the directions and have a key that can access all of your Amazon services, stop. Flip back to Getting Started and create a key just for this project. A key thief with a full-access key could bankrupt you by creating a server cluster to farm bitcoins. That’s not a credit card bill I’d want.

The good news is that keys are easy to keep safe.

- Use keyfiles instead of putting them into the body of scripts.

- Don’t keep keyfiles in directories using version control.

If you don’t put them into the body of a script, they won’t be accidentally uploaded. Code sharing is an important part of learning. At some point you’ll want to post a script to a forum or code hosting site (StackOverflow or GitHub, for example). There’s no worry about forgetting to take the keys out if you keep them out of your scripts.

The other way a key can leak out into the world is via version control. A keyfile that gets included in a commit will always be recoverable. This is why we use version control. It lets us go back in time and recover deleted files. Once a keyfile is in the project, it will always be in the project. If you were to push the project to a GitHub repository, the key would go with it.

To repeat, don’t ever put keys into the body of a script, or in a directory using version control.

Key file

To keep our keys safe, we need a home for them. A hidden folder inside the home folder is a good place. Change to your home folder and create the new folder.

$ cd && mkdir .cloud-keys

The cd command with no arguments will always take you to the home folder. The && is a quick way to chain commands on one line. We’ll be using the .cloud-keys folder for the rest of the book.

$ nano .cloud-keys/aws-chapter-01.key

Once Nano opens up, add the following code block and save. (Ctrl-X, then Y, then Enter.) This function will be used to return our keys to the script. There are better ways to do this. We’ll explore those in the following chapter. This is the simplest way to create a quick script that follows our safety rules.

    def my_key(key)
      aws_public_key = "your-aws-public-key-goes-here"
      aws_secret_key = "your-aws-secret-key-goes-here"

      if "secret" == key
        return aws_secret_key
      elsif "public" == key
        return aws_public_key
      end
    end

Make sure to copy your S3 keys exactly. We’ll be using them in the next section.
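If you want to see how this function behaves before we use it, here’s a small sketch you can paste into irb. The key values are the same placeholders as above, not real keys. One thing worth noticing: any argument other than "public" or "secret" matches neither branch, so the function hands back nil.

```ruby
# Same function as in the keyfile, with placeholder values only.
def my_key(key)
  aws_public_key = "your-aws-public-key-goes-here"
  aws_secret_key = "your-aws-secret-key-goes-here"

  if "secret" == key
    return aws_secret_key
  elsif "public" == key
    return aws_public_key
  end
end

puts my_key("public")        # your-aws-public-key-goes-here
puts my_key("secret")        # your-aws-secret-key-goes-here
puts my_key("typo").inspect  # nil, because neither branch matched
```

So a typo in the argument won’t raise an error here. It will just give Fog an empty key later on.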

The quick and dirty way

To get everything working we’re going to make a simple script that just runs top to bottom. In ruby, everything is an object. That makes advanced programming very easy. It’s also overkill for what we’re doing. We can use the object-oriented nature of ruby without building an “OO” program. This way we can write clean code with fewer lines.

The goals for the finished script are:

- Get the file that’s listed on the command line.

- Create a connection to S3.

- Upload the cat picture.

When we’re done the file will be in the cloud!

Getting the file

Change back to your working directory.

$ cd ~/dev/ruby-book

Open the uploader.rb file in your text editor. If you’re new to command line editing keep using nano. I personally use vim and think it’s worth the time to learn. If you want a non-command line editor, TextWrangler is a good choice. Now we start editing.

Right underneath the #!/usr/bin/env ruby add this.

load File.expand_path('~/.cloud-keys/aws-chapter-01.key')

    if ARGV.length != 1
      exit(1)
    end

The first line brings in the keyfile we made. Now we can use it just like we typed it in this file. The second line is checking to see how many “things” are on the command line after the file name. Right now we’re not concerned about what’s there. We only want to know if one thing is there. If there is not exactly one item, quit.
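To see what load does without touching your real keyfile, here’s a rough sketch that writes a throwaway file, loads it, and then calls the method that file defined. The method name demo_key and its return value are made up for this example.

```ruby
require 'tempfile'

# Write a throwaway "keyfile" that defines one method, then load it.
# demo_key and 'placeholder-value' are invented for this example.
Tempfile.create(['demo', '.key']) do |file|
  file.write("def demo_key\n  'placeholder-value'\nend\n")
  file.flush

  # load runs the file's code as if it were typed into this script.
  load file.path
end

# The temp file is gone now, but the method it defined is still here.
puts demo_key   # placeholder-value
```

Notice that load doesn’t care about the .key extension. It runs whatever ruby code it finds in the file.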

ARGV is a special global array that any part of the script can access. It’s an array, but it’s also an object. As an array object it has built-in mini-programs that we can use. These mini programs are called methods. Here we’re using the length method. The dot (.) tells ruby to look inside of an object. Here we’re telling ruby “look inside of the array ARGV for a method called length, run it and give us back the result.”

Now we know the length of ARGV. The != is the not-equal operator. It’s comparing the length of ARGV to the number one. We move to the next step if it’s anything other than one. And the next step is to exit. So if there’s no file, or more than one file, the program quits. The (1) after the exit command tells whatever program launched the script (usually the shell) that something went wrong.
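Here’s the same check pulled out into a tiny helper so you can try it against a few pretend command lines. The helper name exactly_one_argument? and the file names are hypothetical. Our script doesn’t use them.

```ruby
# Hypothetical helper: true only when exactly one argument was given.
def exactly_one_argument?(args)
  args.length == 1
end

puts exactly_one_argument?([])                  # false, nothing given
puts exactly_one_argument?(['cat.jpg'])         # true
puts exactly_one_argument?(['a.jpg', 'b.jpg'])  # false, too many
```

Our script asks the opposite question with != and quits whenever this would be false.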

Go ahead and run the file. The ./ tells the shell to look in this folder, the one we’re working in, and run the named script.

$ ./uploader.rb

Then check the shell exit status.

$ echo $?

1

Nothing happened! Which is exactly the point. But notice the lonely 1. That’s our exit status. The $? is a special shell variable that holds the exit status of the last program run. We knew the script would fail, now the shell does too.

Try the command this way.

$ ./uploader.rb not-a-real-file.png

$ echo $?

0

Now the exit status is zero. This means the program ran and exited successfully. Remember we’re not checking to see if not-a-real-file.png is real or not. We’re just checking that something was included on the command line.
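Ruby has a $? of its own that works much like the shell’s. As a rough sketch, this starts a child ruby that does nothing but exit, then reads back the status. The helper name exit_status_of is made up for this example.

```ruby
require 'rbconfig'

# Hypothetical helper: start a child ruby that exits with the given
# code, then report the status this (parent) process sees.
def exit_status_of(code)
  system(RbConfig.ruby, '-e', "exit(#{code})")
  $?.exitstatus
end

puts exit_status_of(1)   # 1, something went wrong
puts exit_status_of(0)   # 0, all good
```

This is the same conversation our script has with the shell, just with ruby playing the part of the shell.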

With this working, we can move along to connecting to S3 and finally sending that file.

Get ready to connect

If you followed the directions in Getting Started you have the gem fog installed and tested. If not, it’s time to flip back and do that. This next line will give our little script the power to talk to the clouds. Add a blank line after the last end and add this.

require 'fog'

That’s all that’s needed.

To make our connection we need our keys. This next part will pull in the keys and create a connection to S3.

    aws = Fog::Storage.new(
      {
        :provider => 'AWS',
        :aws_access_key_id => my_key('public'),
        :aws_secret_access_key => my_key('secret')
      }
    )

We’ve created a Fog object in the variable aws. To make the connection we had to give it our keys along with the name of our storage provider. Save the file and run the program again.

$ ./uploader.rb not-a-real-file.png

$ echo $?

0

Pretty simple, eh? We also got back a zero, meaning that everything worked like it was supposed to.

Did you notice the slight hesitation? That was Fog loading everything up. It’s getting ready to log into your S3 account. But we haven’t told it to do anything yet.

Before moving on, let’s go through the code we just added.

aws = Fog::Storage.new(

This is called the constructor. The gem Fog is being told we want it to make a new connection to storage (as opposed to other cloud services like compute, DNS, or CDN). Then it wraps all this up in our aws variable so we can use it in the next part of the script. There’s a lot happening behind the scenes, which is why we like writing scripts in ruby!

The next three lines are related. They provide the who and where that Fog needs to make a connection.

:provider => 'AWS',

This is how Fog knows where to connect to. AWS is predefined by Fog and is a shorthand for all the details to connect to Amazon Web Services. The next two lines take care of the who.

:aws_access_key_id => my_key('public'),

:aws_secret_access_key => my_key('secret')

These two lines are us being careful with our keys. We don’t have to worry about accidentally uploading or emailing our keys to someone if we share this script. When the my_key function is called your S3 keys are given to Fog.

The who and where are provided to Fog in a hash, a ruby data type that lets us store different kinds of information all in one group. Hashes use curly braces {} to hold keys and values. (“Key” can also be thought of as an index.) The key is on the left, value on the right, always as a pair with the => between them. The key can be named anything and will point to the value. This is great for getting data out when you know the key. It’s not so good when you need to keep data in a certain order. Arrays work better for that.

Fog expects certain names. That’s why we’re using provider, aws_access_key_id, and aws_secret_access_key.
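If hashes are new to you, here’s a quick sketch to play with in irb. It uses the same three names Fog expects, but the values are placeholders, and nothing here talks to Fog or S3.

```ruby
# Placeholder values only. Real keys never belong in a script.
settings = {
  :provider              => 'AWS',
  :aws_access_key_id     => 'public-key-placeholder',
  :aws_secret_access_key => 'secret-key-placeholder'
}

# Ask for a value by its key.
puts settings[:provider]             # AWS

# Count the pairs.
puts settings.keys.length            # 3

# Asking for a key that isn't there gives back nil.
puts settings[:no_such_key].inspect  # nil
```

That last line is why the exact names matter. A misspelled key doesn’t raise an error in the hash itself.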

Now we get to the fun part, sending cat pictures across the Internet!

Connecting

We need a location for our file. These are our S3 buckets. To keep this quick we’ll put the name of the bucket into the file. We’ll update this later so we can change the bucket on the fly.

You should have created the ruby-book bucket back in Getting Started. Add this to our script.

bucket = aws.directories.get('ruby-book')

We’re telling our aws object to find our bucket and load it into bucket so we can use it.

Save and give it a quick test.

$ ./uploader.rb not-a-real-file.png

$ echo $?

0

No errors, very nice. With that command we needed to talk to S3. If you have an error, it’s most likely a problem with your keys. Key errors look like this:

expects.rb:6:in `response_call': Expected(200) <=> Actual(403 Forbidden)

That’s a web server error code (403) meaning that you don’t have the right key for what you’re trying to get access to. Double check your .cloud-keys/aws-chapter-01.key for errors.

Make sure you can run the code so far. We don’t want login errors while we’re trying to upload. Once your code runs cleanly, take a break.

Congratulations! You’ve come a long way. So far, you’ve learned:

- How to create an executable file.

- How to run a script you’ve written.

- A few git commands.

- How to read command line arguments.

- How to check shell exit codes.

- How to load S3 keys from an external file.

- How to connect to an S3 bucket.

Not bad for one chapter, eh? But there’s more!

Uploading

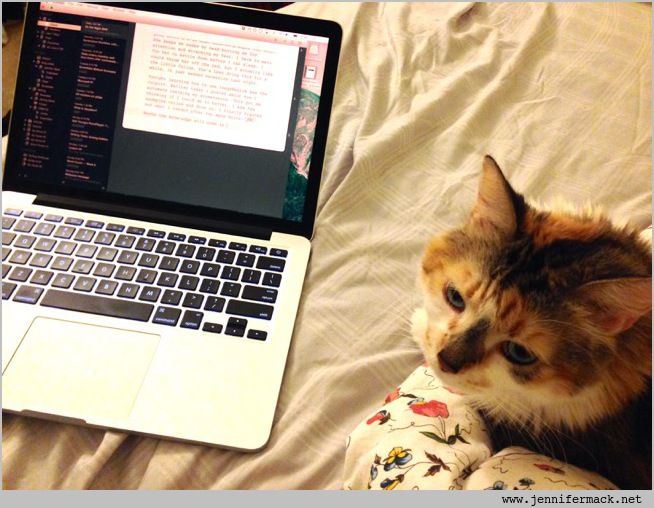

Until now, we’ve been using the nonexistent file not-a-real-file.png as a placeholder. We didn’t have a cat picture in our project folder before. We’ll fix that now. If you don’t have a cat picture to use for the rest of this chapter, you can use this one.

I have it saved as mac-cat.jpg. That will be the name I’ll use in the rest of the examples. It should be saved into your project folder.

Let’s keep working on our script by adding this line.

cat = File.expand_path(ARGV[0])

Here, we’ve loaded up the variable cat with the full path to our picture. Which just happens to be the first thing (element 0) in our list of command line files.

We also need to get the name of the picture. When we’re working out of the same folder, ARGV[0] will be the name without path information. So we could just use it again.

But if we wanted another file that’s on the desktop, that wouldn’t work. ARGV[0] would hold the whole path, not just the name.

$ ./uploader.rb ~/Desktop/other-cat.jpg

So to get the filename we just ask ruby to do the work for us.

catname = File.basename(cat)

This just chops off the filename from the path and saves it to catname. We now have a cat picture, a cat picture file path, and a cat picture filename. And that’s all we need to get on with the uploading.
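Here’s a small sketch of both File methods at work. The paths are made-up examples in the Mac style, and the files don’t need to exist for this to run. The starting folder passed to expand_path is only there to make the output predictable. Our script leaves it out and uses the current folder.

```ruby
# A bare name and a full path both boil down to just the filename.
puts File.basename('mac-cat.jpg')                        # mac-cat.jpg
puts File.basename('/Users/jenn/Desktop/other-cat.jpg')  # other-cat.jpg

# expand_path turns a bare name into a full path. The second argument
# is the folder to start from (normally the current folder).
puts File.expand_path('mac-cat.jpg', '/Users/jenn/dev/ruby-book')
# /Users/jenn/dev/ruby-book/mac-cat.jpg
```

Either way, the name we get for the bucket is just the filename, no matter where the picture lives.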

The last bit of our script creates the file in our S3 bucket, and then uploads the file contents. You have to name the cat before you can fill it full of feline goodness.

    bucket.files.create(
      {
        :key => catname,
        :body => File.read(cat),
        :public => true
      }
    )

This creates the file. :key will be the name in the S3 bucket. :body is the contents of the file. We’re telling Fog to read the file from our computer, then send it to S3. The last part, :public makes the file visible to the world. If you leave out the last part (or set it to false), people will see a 403 Forbidden error instead of your cat. Which would defeat the entire purpose of the Internet.
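If you’re curious what that hash looks like before Fog gets it, here’s a rough sketch that builds it without touching S3. The helper upload_options is hypothetical, and a throwaway file with fake contents stands in for the cat picture.

```ruby
require 'tempfile'

# Hypothetical helper: build the same hash we hand to bucket.files.create.
def upload_options(path)
  full_path = File.expand_path(path)
  {
    :key    => File.basename(full_path),
    :body   => File.read(full_path),
    :public => true
  }
end

# A throwaway file with fake contents, standing in for a real picture.
Tempfile.create(['fake-cat', '.jpg']) do |file|
  file.write('not really a cat')
  file.flush

  options = upload_options(file.path)
  puts options[:key]           # the temp file's name, ending in .jpg
  puts options[:body].length   # 16, the number of characters we wrote
  puts options[:public]        # true
end
```

Notice that File.read pulls the whole file into memory before anything is sent. That’s fine for one cat picture.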

$ ./uploader.rb mac-cat.jpg

$ echo $?

0

With no errors and a successful exit code, we know our cat is safe in S3’s loving embrace. But if you want to check, you can see my upload at this link:

https://s3.amazonaws.com/ruby-book/mac-cat.jpg

Can you believe you just wrote a script that uploads a file to S3 and it only took 30 lines of code?

Kinda cool, eh?

Now that we have a working base, the upcoming chapters will expand on this code. We’ll add error checking, default options, better key storage, and multiple file uploads.

Keep reading, the good stuff awaits!

Final script

    #!/usr/bin/env ruby

    load File.expand_path('~/.cloud-keys/aws-chapter-01.key')

    if ARGV.length != 1
      exit(1)
    end

    require 'fog'

    aws = Fog::Storage.new(
      {
        :provider => 'AWS',
        :aws_access_key_id => my_key('public'),
        :aws_secret_access_key => my_key('secret')
      }
    )

    bucket = aws.directories.get('ruby-book')

    cat = File.expand_path(ARGV[0])

    catname = File.basename(cat)

    bucket.files.create(
      {
        :key => catname,
        :body => File.read(cat),
        :public => true
      }
    )